Bitcoin full validation sync performance

Testing full sync of five Bitcoin node implementations.

As I’ve noted many times in the past, running a fully validating node gives you the strongest security model and privacy model that is available to Bitcoin users. But are all node implementations equal when it comes to performance? I had my doubts, so I ran a series of tests.

The computer I chose to use as a baseline is high-end but uses off-the-shelf hardware. I wanted to see what the latest generation SSDs were capable of doing, since syncing nodes tend to be very disk I/O intensive.

We’ve also run tests on less high-end hardware such as the Raspberry Pi, the board we use in our plug-and-play Casa Node for Lightning and Bitcoin. We learned that syncing Bitcoin Core from scratch on one can take weeks or months (which is why we ship Casa Nodes with the blockchain pre-synced).

I bought this PC at the beginning of 2018. It cost about $2,000.

Just set up a maxed out @PugetSystems PC on PureOS 8.0.

— Jameson Lopp (@lopp) February 11, 2018

Core i7 8700 3.2GHz 6 core CPU

32 GB DDR4-2666

Samsung 960 EVO 1TB M.2 SSD

Synced Bitcoin Core 0.15.1 (w/maxed out dbcache) in 162 min w/peak speeds of 80 MB/s. Next step: see how much traffic I can serve on gigabit fiber. pic.twitter.com/QOvuwPgCgy

I ran a few syncs back in February but nothing comprehensive across different implementations. When I ran another sync in October I was asked to test the performance when running the non-default full validation of all signatures.

Note that no Bitcoin implementation strictly fully validates the entire chain history by default. As a performance improvement, most of them don’t validate signatures before a certain point in time, usually a year or two ago. This is done under the assumption that if a year or more of proof of work has accumulated on top of those blocks, it’s highly unlikely that you are being fed fraudulent signatures that no one else has verified, and if you are then something is incredibly wrong and the security assumptions underlying the system are likely compromised.
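To make that shortcut concrete, here is a minimal sketch of the per-block decision. It is a simplification under stated assumptions: the name `should_check_signatures` and the height-based comparison are hypothetical, and real implementations typically key the decision on a specific block hash (plus further chain-work checks) rather than a bare height.

```python
from typing import Optional


def should_check_signatures(block_height: int,
                            assumed_valid_height: Optional[int]) -> bool:
    """Return True if the signatures in this block should be verified.

    assumed_valid_height is a hypothetical stand-in for the "trusted up to
    here" point; None means the shortcut is disabled (full validation).
    """
    if assumed_valid_height is None:
        # Full validation: verify signatures in every block.
        return True
    # In this sketch, only blocks above the assumed-valid point get
    # their signatures checked.
    return block_height > assumed_valid_height
```

With the shortcut disabled, which is what the tests below do, `should_check_signatures(1, None)` is true for every block from the start of the chain.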

Test results

It took 311 minutes to sync Bitcoin Core 0.17 from genesis with assumevalid=0 on this machine; bottlenecked on CPU most of the time from what I could tell.

— Jameson Lopp (@lopp) October 24, 2018

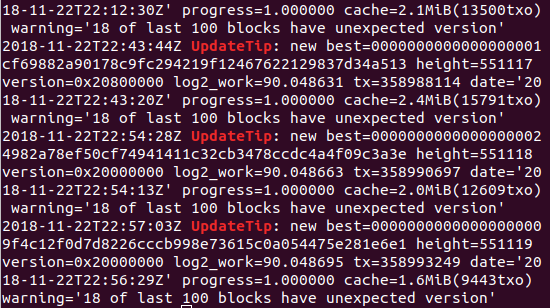

Testing Bitcoin Core was straightforward — I just needed to add the assumevalid parameter. My bitcoind.conf:

assumevalid=0

dbcache=24000

maxmempool=1000

Full validation sync of @Bcoin 1.0.2 with 1GB UTXO cache finished in 27 hours, 32 minutes. If you try to allocate more than 1GB to the UTXO cache, you're gonna have a bad time. NodeJS default heap size of 1.7GB causes lots of issues, is hard to work around.

— Jameson Lopp (@lopp) November 3, 2018

Bcoin took a lot more work. I have regularly run Bcoin nodes but I haven’t spent a lot of time messing with the configuration options, nor had I ever tried a full validation sync. I ended up filing several GitHub issues and had to try syncing about a dozen different times before I found parameters that wouldn’t cause the NodeJS process to exhaust its heap and crash. My bcoin.conf:

checkpoints=false

coin-cache=1000

Along with: export NODE_OPTIONS=--max_old_space_size=16000

Full validation sync of Libbitcoin Node 3.2.0 took my machine 20 hours, 24 minutes with a 100,000 UTXO cache. Requires manually removing checkpoints from the code & recompiling for full validation. Expect version 4.0 to be much faster due to implementing headers-first sync.

— Jameson Lopp (@lopp) November 5, 2018

Libbitcoin Node wasn’t too bad; the hardest part was removing the hardcoded block checkpoints. To do so I had to clone the git repository, check out the “version3” branch, delete all of the checkpoints other than genesis from bn.cfg, and run the install.sh script to compile the node and its dependencies.

I did have to try twice to sync it, because unlike every other implementation with a UTXO cache, Libbitcoin’s cache parameter is in units of UTXOs rather than megabytes of RAM. So the first time I ran a sync I set it to 60,000,000 UTXOs in order to keep them all in RAM — under the assumption that my 32GB of RAM would be sufficient since Bitcoin Core uses less than 10GB to keep the entire UTXO set in memory. Unfortunately Libbitcoin Node ended up using all of my RAM and once it started using the swap, my whole machine froze. After chatting with developer Eric Voskuil he noted that a UTXO cache greater than 10,000 would have negligible impact, so I ran the second sync with it set to 100,000 for good measure. My libbitcoin config:

[blockchain]

# I set this to the number of virtual cores since default is physical cores

cores = 12

[database]

cache_capacity = 100000

[node]

block_latency_seconds = 5

Full validation sync of btcd 0.12.0 took my machine 3 days, 23 hours, 12 minutes. CPU-bound the entire time.

— Jameson Lopp (@lopp) November 10, 2018

BTCD was fairly straightforward — it completed on the first sync. However, as far as I can tell it doesn’t have a configurable UTXO cache, if it has one at all. My btcd.conf:

nocheckpoints=1

sigcachemaxsize=1000000

Tried to sync @ParityTech's Bitcoin implementation.

— Jameson Lopp (@lopp) November 1, 2018

Got to block 481824 (SegWit activation) after 21 hours, 7 min.

Block 481824 verification failed with error "WitnessInvalidNonceSize"

Looks like Parity has a SegWit consensus bug.

Marking sync time as ∞

The first Parity Bitcoin sync attempt didn’t go well. After Parity fixed the consensus bug I tried again.

Recompiled Parity Bitcoin with the SegWit bug fix.

— Jameson Lopp (@lopp) November 12, 2018

Full validation sync with 24GB dbcache and "verification edge" set to block 1 took 38 hours, 17 minutes, was disk I/O bound most of the time.

Though once it got into the “full block” territory of the blockchain (after block 400,000) it slowed to 2 blocks per second. CPU usage was only about 30% though it was using a lot of the cache I made available — 17GB worth! But for some reason it was still slower than I expected — a look at the disk I/O activity showed it to be the culprit. For some reason it was constantly churning 50 MB/s to 100 MB/s in disk writes even though it was only adding about 2 MB/s worth of data to the blockchain. It kept slowing down after SegWit activation such that after block 500,000 it was only processing about 0.5 blocks per second. CPU, RAM, and bandwidth usage were about the same, so I checked disk I/O again. At this point there was very little write activity but it was constantly doing about 30 MB/s worth of disk reads even though there was 4GB of cache left that was not being used. There are probably some inefficiencies in Parity’s internal database (RocksDB) that are creating this bottleneck. I’ve had issues with RocksDB in the past while running Parity’s Ethereum node and while running Ripple nodes. RocksDB was so bad that Ripple actually wrote their own DB called NuDB. My pbtc config:

--btc

--db-cache 24000

--verification-edge 00000000839a8e6886ab5951d76f411475428afc90947ee320161bbf18eb6048
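The mismatch between disk writes and chain growth described above can be put as a write amplification ratio. This is a back-of-the-envelope sketch using only the rough figures quoted in the text (50 to 100 MB/s written, about 2 MB/s of new chain data); the function name is made up for illustration.

```python
def write_amplification(disk_write_mb_s: float, chain_growth_mb_s: float) -> float:
    """Ratio of bytes written to disk per byte of new chain data."""
    return disk_write_mb_s / chain_growth_mb_s


low = write_amplification(50, 2)    # lower bound of observed disk writes
high = write_amplification(100, 2)  # upper bound of observed disk writes
print(f"write amplification: {low:.0f}x to {high:.0f}x")
```

In other words, the node was writing somewhere between 25 and 50 bytes to disk for every byte it added to the chain.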

I ran a comparison sync of Bitcoin Knots 0.16.3 to height 547100 and it took 327 minutes. I bet Knots 0.17 would be even closer to 311 minutes, though it's already inside what I'd consider the margin of error. Difference is negligible. https://t.co/GqqxkY1G0o

— Jameson Lopp (@lopp) December 4, 2018

Bitcoin Knots is a fork of Bitcoin Core, so I didn’t expect the performance to be much different. I didn’t test it originally but after posting this article several folks requested a test, so I ran it, also with bitcoind.conf:

assumevalid=0

dbcache=24000

maxmempool=1000

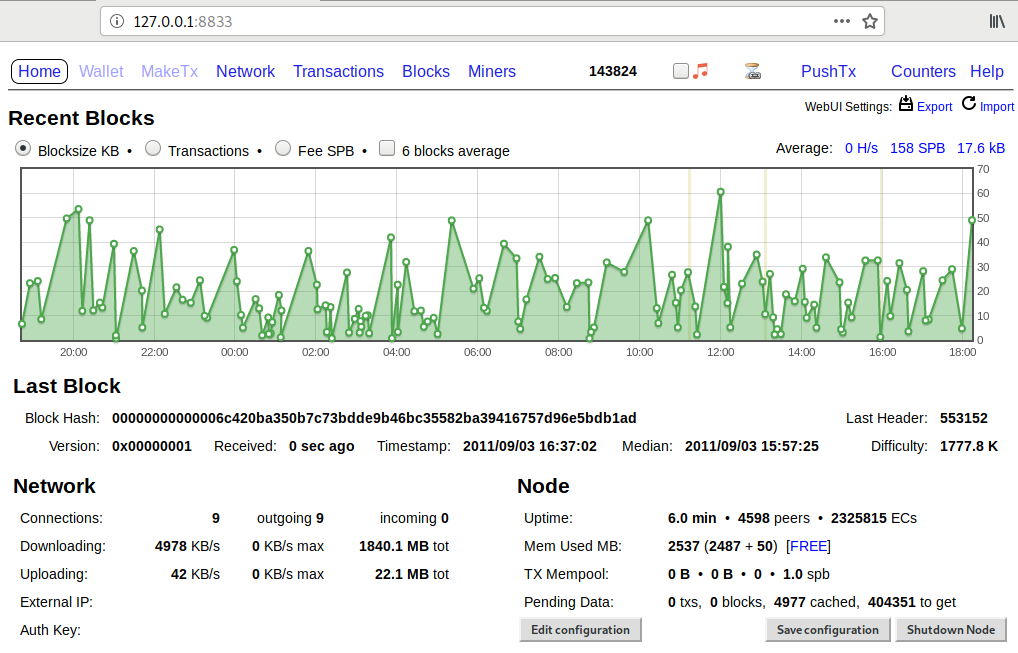

Full validation sync of Gocoin 1.9.5 with secp256k1 library enabled took my machine 12 hours, 32 minutes to get to chain tip. Unfortunately, after syncing the node crashes with a divide by zero error. https://t.co/LjDVK7DdNs

— Jameson Lopp (@lopp) December 10, 2018

After initially publishing this post, someone recommended that I test Gocoin. I had heard of this client a few times before, but it rarely comes up in my circles. I did manage to find a critical bug while testing it, but the developer fixed it within a few hours of my report. Gocoin touts itself as a high performance node.

It keeps the entire UTXO set in RAM, providing the best block processing performance on the market.

After reading through the documentation, I made the following changes to get maximum performance:

- Installed secp256k1 library and built gocoin with sipasec.go

- Disabled wallet functionality via AllBalances.AutoLoad:false

My gocoin.conf:

LastTrustedBlock: 00000000839a8e6886ab5951d76f411475428afc90947ee320161bbf18eb6048

AllBalances.AutoLoad:false

UTXOSave.SecondsToTake:0

Not only was Gocoin fairly fast at syncing, it is the only node I’ve come across that provides a built-in web dashboard!

Performance Rankings

- Bitcoin Core 0.17: 5 hours, 11 minutes

- Bitcoin Knots 0.16.3: 5 hours, 27 minutes

- Gocoin 1.9.5: 12 hours, 32 minutes

- Libbitcoin Node 3.2.0: 20 hours, 24 minutes

- Bcoin 1.0.2: 27 hours, 32 minutes

- Parity Bitcoin 0.? (no release): 38 hours, 17 minutes

- BTCD 0.12.0: 95 hours, 12 minutes
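To compare the rankings at a glance, the sketch below converts each time to minutes and expresses it as a multiple of the Bitcoin Core result. The numbers are copied from the list above; the variable and function names are just for illustration.

```python
# Sync times copied from the ranking above, as (hours, minutes).
sync_times = {
    "Bitcoin Core 0.17": (5, 11),
    "Bitcoin Knots 0.16.3": (5, 27),
    "Gocoin 1.9.5": (12, 32),
    "Libbitcoin Node 3.2.0": (20, 24),
    "Bcoin 1.0.2": (27, 32),
    "Parity Bitcoin": (38, 17),
    "BTCD 0.12.0": (95, 12),
}


def to_minutes(hours: int, minutes: int) -> int:
    """Convert an (hours, minutes) pair to total minutes."""
    return hours * 60 + minutes


# Bitcoin Core is the baseline: 311 minutes.
baseline = to_minutes(*sync_times["Bitcoin Core 0.17"])

# Slowdown factor of each implementation relative to Bitcoin Core.
slowdowns = {name: to_minutes(*hm) / baseline for name, hm in sync_times.items()}

for name, factor in slowdowns.items():
    print(f"{name}: {to_minutes(*sync_times[name])} min, {factor:.1f}x Bitcoin Core")
```

By this measure Gocoin lands at roughly 2.4 times the Bitcoin Core sync time and BTCD at roughly 18 times.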

Exact comparisons are difficult

While I ran each implementation on the same hardware to keep those variables static, there are other factors that come into play.

- There’s no guarantee that my ISP was performing exactly the same throughout the duration of all the syncs.

- Some implementations may have connected to peers with more upstream bandwidth than other implementations. This could be random or it could be due to some implementations having better network management logic.

- Not all implementations have caching; even when configurable cache options are available it’s not always the same type of caching.

- Not all nodes perform the same indexing functions. For example, Libbitcoin Node always indexes all txs by hash — it’s inherent to the database structure. Thus this full node sync is more properly comparable to Bitcoin Core with the tx indexing option enabled.

- Operating system and file system differences can come into play. One of my colleagues noted that when syncing on ZFS with spinning disks, Bitcoin Core performed better with a smaller dbcache. He reasoned that ZFS and Linux are very good at optimizing what data is cached or buffered, so bitcoind’s DB cache ended up spending RAM on data that didn’t need caching, which meant that the overall amount of I/O needed on the spinning disks was higher with a larger dbcache.

Room for improvement

I’d like to see Libbitcoin Node and Parity Bitcoin make it easier to perform a full validation with a single parameter rather than having to dig through code and recompile without checkpoints or set a checkpoint at block 1 in order to override default checkpoints.

With Bcoin I had to modify code in order to raise the cache above 4 GB. I also had to set environment variables to increase the NodeJS heap size, which defaults to 1.5GB in V8, though after a ton of experimentation it wasn’t clear to me why bcoin kept exhausting the heap even when I had the heap set to 8GB and the UTXO cache set to 4, 3, or even 2 GB. Bcoin should implement sanity checks that ensure a node operator can’t set a config that will crash the node by exhausting the heap.

Another gripe I have that is nearly universal across different cryptocurrencies and implementations is that very few will throw an error if you pass an invalid configuration parameter. Most silently ignore them and you may not notice for hours or days. Sometimes this resulted in me having to re-sync a node multiple times before I was sure I had the configuration correct.
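As a minimal sketch of the behavior I would prefer, the parser below rejects any option it does not recognize instead of silently ignoring it. Everything here is hypothetical: `KNOWN_OPTIONS`, `parse_config`, and the simple key=value format are stand-ins for illustration, not the API of any node implementation.

```python
# Example option names only; a real node would list every option it supports.
KNOWN_OPTIONS = {"assumevalid", "dbcache", "maxmempool"}


def parse_config(text: str, known=KNOWN_OPTIONS) -> dict:
    """Parse key=value lines, raising ValueError on any unknown key."""
    options = {}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue  # skip blank and comment-only lines
        key, sep, value = line.partition("=")
        key = key.strip()
        if not sep:
            raise ValueError(f"line {lineno}: expected key=value, got {raw!r}")
        if key not in known:
            raise ValueError(f"line {lineno}: unknown option {key!r}")
        options[key] = value.strip()
    return options
```

With this approach a typo such as `dbcach=24000` fails at startup with a line number, rather than hours into a sync that is quietly running with the default cache size.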

On a similar note, I think that every node implementation should generate its default config file on first startup, even if the file is empty. This way the node operator knows where the config options should go — more than once I’ve created a config file that was named incorrectly and thus was ignored.
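A sketch of that first-startup behavior could be as small as the following. The function name `ensure_config` and the placeholder comment it writes are assumptions for illustration only.

```python
from pathlib import Path


def ensure_config(path: Path) -> bool:
    """Create a commented placeholder config at `path` if none exists.

    Returns True if the file was created, False if it was already there.
    """
    if path.exists():
        return False  # never overwrite an operator's existing config
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text("# Node configuration. Add key=value options below.\n")
    return True
```

Called once at startup with the path the node actually reads, this leaves no doubt about where the options belong or what the file should be called.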

As a side note, I was disappointed with the performance of my Samsung 960 EVO, which is marketed with sequential read speeds of 3,200 MB/s and write speeds of 1,900 MB/s. If you read the fine print, those numbers are based on “Intelligent TurboWrite region” specs, after which the numbers drop drastically. In my real-world testing with these node implementations I rarely saw read or write speeds exceed 100 MB/s. The question of how much disk activity is sequential and how much is random also comes into play, though I’d expect most of the writes to be sequential.

In general my feeling is that not many implementations have put much effort into optimizing their code to take advantage of higher end machines by being greedier with resource usage.

Altcoin tests

Just for fun, I tried a few other popular non-Bitcoin-derivative cryptocurrencies.

Full validation sync of @ParityTech 2.1.3 now takes 5,326 minutes (3.7 days) on this machine. I increased cache to 24GB RAM and it peaked at 23GB. Disk I/O is the bottleneck, with over 22TB read and 20TB written in total. 5X longer only 8 months later. https://t.co/N1hBLRMlN6

— Jameson Lopp (@lopp) October 28, 2018

I’ve been tracking Parity’s full validation sync time for a while now, ever since I started running Parity nodes at BitGo. Unfortunately it seems that the data being added to the Ethereum blockchain is significantly outpacing the rate at which the implementation is being improved.
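For a sense of scale, the totals in the tweet above can be turned into average disk throughput over the whole sync. This is rough arithmetic only: it assumes decimal units (1 TB = 1,000,000 MB) and treats "over 22TB" and "20TB" as exact, and the function name is made up for illustration.

```python
def average_mb_per_s(terabytes: float, minutes: float) -> float:
    """Average throughput, assuming decimal units (1 TB = 1,000,000 MB)."""
    return terabytes * 1_000_000 / (minutes * 60)


read_rate = average_mb_per_s(22, 5326)   # total reads over the full sync
write_rate = average_mb_per_s(20, 5326)  # total writes over the full sync
print(f"average read: {read_rate:.0f} MB/s, average write: {write_rate:.0f} MB/s")
```

That works out to a sustained average of roughly 69 MB/s of reads and 63 MB/s of writes for nearly four days.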

Some folks wanted a comparison full validation sync of geth and I'm happy to oblige. Unfortunately it's stuck at block 3804607 with an "invalid gas used (remote: 1329607 local: 1269910)" error. Looks like I'm not alone. Marking this sync time down as: ∞. https://t.co/q9LTVQyFPj

— Jameson Lopp (@lopp) October 30, 2018

Geth seems to also have a consensus bug, which is quite surprising given this implementation’s popularity. I can only assume that very few folks try to run it in full validation mode. Nevertheless, it’s amazing that this critical consensus bug was reported 4 months ago but still hasn’t been fixed…

I’ll have to try Geth again after the bug is fixed since the developers appear to have made some significant disk I/O optimizations.

#golang #Ethereum optimization waiting for merge (blue = master, purple = proposal, mainnet head) 😍 pic.twitter.com/3KK812C7ki

— Péter Szilágyi (@peter_szilagyi) November 13, 2018

Several weeks later the code was released and my test showed a significant improvement.

Geth fixed the consensus bug I hit during earlier testing and drastically reduced disk I/O usage for their 1.8.19 release. Full validation chain sync takes my machine 4 days, 6 hours, 21 min - now bottlenecked on CPU instead of disk. About 12 hours slower than Parity 2.1.3.

— Jameson Lopp (@lopp) December 9, 2018

I had to run monerod twice because I assumed that the config file was monero.conf whereas it should have been bitmonero.conf.

Full validation sync of @monero 0.13.0.4 on my beast machine took 20 hours 16 min w/ non-default configs. My earlier tweet claiming 168 minutes was incorrect; that was using default checkpoints. On bright side, looks like better networking logic could yield nearly 10X speedup.

— Jameson Lopp (@lopp) October 29, 2018

The really cool thing about monerod is that it has an interactive mode that you can use to change the configuration of the node while it is running. This was really helpful for performance testing and I’m not aware of any other crypto node implementations that have this kind of interactive configuration.

Full validation sync of zcashd 2.0.1 took my machine 6 hours 11 minutes with dbcache=24000. Zcash was CPU bound the entire time, used a max of 5.3GB RAM. In terms of data processing performance (20GB in 6 hours), this is about 10% the speed of Bitcoin Core (200GB in 5 hours.)

— Jameson Lopp (@lopp) December 4, 2018

I expected Zcash would be somewhat fast due to having a small blockchain but somewhat slow due to the computationally expensive zero knowledge proofs and due to being based off of Core 0.11. Looks like they balanced out.
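The throughput comparison in the tweet above can be reproduced with a few lines of arithmetic. This sketch uses the rounded data sizes from the tweet (20GB and 200GB) and the measured sync times, so the result is only approximate; the function name is made up for illustration.

```python
def gb_per_hour(gigabytes: float, hours: int, minutes: int) -> float:
    """Average data processed per hour of sync time."""
    return gigabytes / (hours + minutes / 60)


zcash = gb_per_hour(20, 6, 11)           # zcashd 2.0.1: ~20GB in 6h 11m
bitcoin_core = gb_per_hour(200, 5, 11)   # Bitcoin Core 0.17: ~200GB in 5h 11m
ratio = zcash / bitcoin_core
print(f"zcashd: {zcash:.1f} GB/h, Bitcoin Core: {bitcoin_core:.1f} GB/h, ratio: {ratio:.0%}")
```

With the exact sync times the ratio comes out a little over 8%, in the same ballpark as the "about 10%" estimate.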

Finally, I considered running a sync of Ripple just for fun but then I read the documentation and decided it didn’t sound fun after all.

At the time of writing (2018-10-29), a rippled server stores about 12GB of data per day and requires 8.4TB to store the full history of the XRP Ledger.

Conclusion

Given that the strongest security model a user can obtain in a public permissionless crypto asset network is to fully validate the entire history themselves, I think it’s important that we keep track of the resources required to do so.

We know that due to the nature of blockchains, the amount of data that needs to be validated for a new node that is syncing from scratch will relentlessly continue to increase over time.

The hard part is ensuring that these resource requirements do not outpace the advances in technology. If they do, then larger and larger swaths of the populace will be disenfranchised from the opportunity for self sovereignty in these systems.

Keeping track of these performance metrics and understanding the challenges faced by node operators due to the complexities of maintaining nodes helps us map out our plans for future versions of the Casa Node.
